The quality of existing time series data is often inadequate for analytics aimed at maintenance optimization. An automated methodology is therefore needed to evaluate and correct time series data.

This task is often not anchored in organizations because it was not needed for the earlier, sporadic and purely visual use of the data. The methodology must manage the trade-off between data quality and data coverage. It must run automatically and be integrated into a data quality process.

Poor data quality is an obstacle to preventive maintenance (CBM, PDM)

No matter which analytical procedures are used to optimize maintenance processes, the quality of the input data is crucial for the reliability of the results. High data quality is therefore a necessary condition for the acceptance of analytical approaches.

Data sets on the operating loads of fleets and systems are usually available, in some cases covering long periods of time. However, this time series data has so far been viewed or evaluated only case by case, so it is generally affected by quality defects. That is acceptable for purely visual inspection. Automated data processing, by contrast, requires high data quality, because even individual deviations can severely distort the result. The data must therefore be checked in full and corrected where necessary.

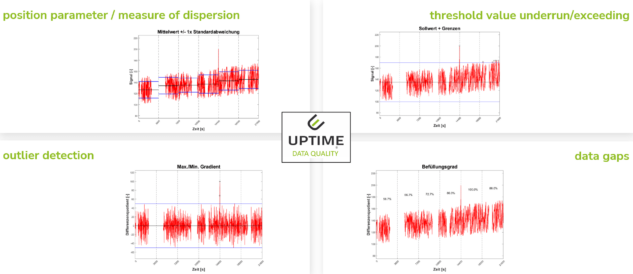

Checking time series data: a quality gate

The demand for high quality arises from the need to avoid incorrect analysis results, so data sets are assessed first. For larger systems and fleets, this is only realistic as an automated process. A combination of criteria such as mean value, spread, threshold values, outliers and degree of filling (the share of expected samples actually present) has proven successful. The limit values for these criteria are derived from the analysis procedures the data is intended for.
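As an illustration, the criteria above could be computed per channel roughly as follows. This is a minimal sketch under stated assumptions, not the method used in practice: it assumes a regularly sampled channel with missing samples stored as `None`, treats as outliers all values more than `z_max` (assumed to be 3) sample standard deviations from the mean, and takes the threshold limits `lower` and `upper` as given. The names `QualityMetrics` and `quality_metrics` are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Optional, Sequence


@dataclass
class QualityMetrics:
    mean_value: float            # mean of the valid samples
    spread: float                # sample standard deviation of the valid samples
    threshold_violations: int    # valid samples outside [lower, upper]
    outliers: int                # valid samples more than z_max std devs from the mean
    fill_degree: float           # share of expected samples that are present (0..1)


def quality_metrics(
    values: Sequence[Optional[float]],
    lower: float,
    upper: float,
    z_max: float = 3.0,  # assumed outlier criterion, not taken from the source
) -> QualityMetrics:
    """Compute simple quality criteria for one regularly sampled channel."""
    valid = [v for v in values if v is not None]
    if len(valid) < 2:
        raise ValueError("need at least two valid samples")
    m = mean(valid)
    s = stdev(valid)
    violations = sum(1 for v in valid if v < lower or v > upper)
    outliers = sum(1 for v in valid if s > 0 and abs(v - m) / s > z_max)
    return QualityMetrics(
        mean_value=m,
        spread=s,
        threshold_violations=violations,
        outliers=outliers,
        fill_degree=len(valid) / len(values),
    )
```

A plain z-score is only one possible outlier test; robust alternatives based on the median are often preferred when a channel contains several spikes.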

Data sets are then released or rejected based on these criteria. The quality gate thus provides a quantitative assessment of every data set, together with reporting that shows where data collection most needs improvement.
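The release decision and the reporting could be sketched as below, assuming the metrics have already been computed for each data set and that the limit values are supplied by the intended analysis. `Limits`, `GateResult`, `quality_gate` and `failure_report` are hypothetical names, and the rule that every criterion must pass is an assumption; a real gate might weight criteria differently.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Limits:
    # Hypothetical limit values; in practice they follow from the intended analysis.
    mean_range: Tuple[float, float]  # plausible (min, max) of the mean value
    max_spread: float
    max_threshold_violations: int
    max_outliers: int
    min_fill_degree: float


@dataclass
class GateResult:
    released: bool
    failed_criteria: List[str] = field(default_factory=list)


def quality_gate(metrics: Dict[str, float], limits: Limits) -> GateResult:
    """Release a data set only if every criterion is within its limit."""
    checks = {
        "mean": limits.mean_range[0] <= metrics["mean"] <= limits.mean_range[1],
        "spread": metrics["spread"] <= limits.max_spread,
        "threshold": metrics["threshold_violations"] <= limits.max_threshold_violations,
        "outliers": metrics["outliers"] <= limits.max_outliers,
        "fill_degree": metrics["fill_degree"] >= limits.min_fill_degree,
    }
    failed = [name for name, ok in checks.items() if not ok]
    return GateResult(released=not failed, failed_criteria=failed)


def failure_report(results: Dict[str, GateResult]) -> Dict[str, int]:
    """Count how often each criterion caused a rejection, most frequent first."""
    counts: Dict[str, int] = {}
    for result in results.values():
        for name in result.failed_criteria:
            counts[name] = counts.get(name, 0) + 1
    return dict(sorted(counts.items(), key=lambda kv: kv[1], reverse=True))
```

Sorting the failure counts puts the criterion that rejects the most data sets first, which is one simple way to decide where data collection should be improved.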

The correction of time series data

Rejecting poor-quality data sets quickly leads to gaps in the coverage of the load history, which in turn undermines the quality of the analysis results. Correcting data sets is intended to prevent this. In general, data corrections are only permitted if they do not distort the analysis results. This is ensured by choosing the correction methods channel by channel, always with reference to the underlying analysis algorithms. For example, it is permissible to interpolate a slow temperature channel over gaps up to the characteristic cooling time, because thermal inertia rules out any relevant fluctuation within that interval. The same procedure is prohibited for volatile channels, for example those that may contain switching events.
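The channel-specific interpolation rule can be illustrated with a minimal sketch. It assumes regular sampling with spacing `dt`, missing samples stored as `None`, linear interpolation as the correction method, and measures a gap as the time between the two valid samples that bound it. The parameter `max_gap` would be set per channel, for example to the characteristic cooling time for a slow temperature channel and to 0 for volatile channels with switching events. The function name `fill_gaps` is hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def fill_gaps(
    values: Sequence[Optional[float]], dt: float, max_gap: float
) -> Tuple[List[Optional[float]], List[bool]]:
    """Linearly interpolate interior gaps whose duration does not exceed max_gap.

    values:  samples on a regular grid with spacing dt (None = missing)
    max_gap: longest gap that may be bridged, measured between the two valid
             samples around it; 0 disables interpolation for the channel
    Returns the filled series and a per-sample flag marking interpolated values.
    """
    out = list(values)
    filled = [False] * len(out)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i  # index of the first missing sample
        while i < n and out[i] is None:
            i += 1
        end = i  # index of the first valid sample after the gap (or n)
        # Leading and trailing gaps lack an anchor on one side and stay missing.
        if start == 0 or end == n:
            continue
        steps = end - start + 1  # sampling intervals between the two anchors
        if steps * dt > max_gap:
            continue  # gap too long for this channel: leave it uncorrected
        left, right = out[start - 1], out[end]
        for k in range(start, end):
            out[k] = left + (right - left) * (k - start + 1) / steps
            filled[k] = True
    return out, filled
```

Gaps that exceed `max_gap` remain missing, so the quality gate can still count them against the degree of filling instead of hiding them behind invented values.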

Proper data correction therefore requires knowledge of how the monitored system can respond to loads, as well as an overview of the analytics requirements. The load responses can be derived from the available monitoring data. The analytics requirements call for a specific definition of analytical goals, which CBM/PDM projects need anyway.

To keep results comparable over longer periods of time, the evaluation criteria, limit values and correction algorithms are kept constant. Corrected data is marked and the raw data is retained, which ensures traceability.
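One way to keep corrections traceable is to store each sample with its raw value, the value passed to the analysis, a correction flag and the version of the rule set that produced it. The sketch below assumes this record layout; `Sample`, `annotate` and the version string `dq-rules-v1` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Hypothetical identifier of the frozen criteria, limits and correction rules.
CONFIG_VERSION = "dq-rules-v1"


@dataclass(frozen=True)
class Sample:
    raw: Optional[float]     # original value, never overwritten
    value: Optional[float]   # value used by the analysis
    corrected: bool          # True if value differs from raw
    method: Optional[str]    # correction applied to this sample, if any
    config_version: str      # rule set used, so results stay comparable over time


def annotate(
    raw: Sequence[Optional[float]],
    corrected: Sequence[Optional[float]],
    method: str,
) -> List[Sample]:
    """Pair raw and corrected values and mark every sample that was changed."""
    if len(raw) != len(corrected):
        raise ValueError("raw and corrected series must have the same length")
    samples = []
    for r, c in zip(raw, corrected):
        changed = r != c
        samples.append(
            Sample(
                raw=r,
                value=c,
                corrected=changed,
                method=method if changed else None,
                config_version=CONFIG_VERSION,
            )
        )
    return samples
```

Because the raw value is kept alongside the corrected one, an analysis can always be rerun on the original data, and the version string shows which rule set a result depends on.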

The data process

Checking and correcting data must be established as part of a data quality process. This process is the prerequisite for avoiding incorrect results and thus for high user acceptance. Because implementing data quality is laborious and costly, however, it must be clearly focused on the main risk and cost drivers.
